Noah here. The conversation around driverless cars has ebbed and flowed over the last few years. Things seem to have kicked off in earnest in the mid-2000s with the DARPA Grand Challenge. By 2010, The New York Times was reporting that “seven [Google] test cars have driven 1,000 miles without human intervention and more than 140,000 miles with only occasional human control.” From there, things went wild. Uber announced a significant investment in autonomous vehicles in early 2015, and Tesla activated “Autopilot” mode a few months later. A few months after that, a team of Google self-driving experts left to start their own autonomous trucking company, Otto. At the end of 2016, Google spun off its self-driving unit as Waymo. It felt like we were all only a few years away from never having to control a car again.

That’s not to say all those cars were the same. Autonomous vehicles are “graded” on a scale from 0 (no automation) to 5 (full automation). Tesla’s Autopilot feature, for instance, is level two (“The car can steer, accelerate, and brake in certain circumstances.”). The autonomous Uber that killed a pedestrian in Arizona in 2018 was level three (“In the right conditions, the car can manage most aspects of driving, including monitoring the environment. The system prompts the driver to intervene when it encounters a scenario it can’t navigate.”). Since that accident, and a series of other setbacks for the technology, serious questions have emerged about the timeline for level five automation. Even Waymo’s CEO has suggested it is decades away.

At the center of much of this autonomous conversation is someone named Anthony Levandowski. He took part in those Grand Challenges and eventually went on to lead the hardware team of Google’s self-driving project. He’s also the one who led the exodus from Google to start Otto. As part of that transition, he may or may not have walked off with “fourteen thousand files, including hardware schematics,” according to an excellent New Yorker story by Charles Duhigg. Uber later bought Otto and was sued by Waymo; the case was eventually settled for 0.34% of Uber’s equity (worth around $250 million at the time).

One of the key technologies in all this is LiDAR, which bounces laser pulses off surrounding objects to measure how far away they are. It is a critical component of autonomous vehicles because it can produce a 3D image of the surroundings, complete with accurate size and distance measurements for objects, something you can’t get with high accuracy from a 2D camera image alone. But it’s complicated. Tesla’s Autopilot doesn’t use LiDAR, and Elon Musk has been a vocal critic of the technology, calling it expensive and unnecessary. Google’s self-driving project relies on LiDAR, and Levandowski helped develop the technology there, so it was surprising to hear that he has recently changed his tune on it:

“Cars still do dumb things even with perfect information” about what is around them, he said. The real problem is predicting what others—including cars and pedestrians—are going to do. “Even if you can see it, it’s not enough. You need to have proper reasoning.” In other words, a “fundamental breakthrough” in AI is necessary to move self-driving car technology forward, he said. “The existing, state-of-the-art [software] is not sufficient to know how to predict the future” of objects around the vehicle.

Why is this interesting?

I sent this to a friend who is deeply embedded in this world to get his take. He sent back the following but asked that I not include his name:

Safety-critical systems should have redundancy. At this stage in autonomous vehicle (AV) development, multiple sensing modalities (camera, LiDAR, and radar) provide redundancy because each sensor involves trade-offs. Cameras have high pixel density for classifying objects, but they don’t directly measure distance to objects and perform poorly in low light. LiDAR and radar measure distance directly and work fine in low light, but they have low pixel density.

The 3D detection literature shows the best detection performance when using inputs from multiple sensors, including LiDAR, as you might expect given these trade-offs. So the sensible thing to do at this stage in the industry is to use all three sensors, from both a safety and a performance perspective. With this in mind, nearly all companies working on Level 4/5 autonomy (e.g., Aurora, Uber, and Waymo) use camera, radar, and LiDAR together in different ways.

But Musk is stuck. He’s supposed to ship autonomy on a massive fleet of existing cars, all of which have only radar, cameras, and small ultrasonic sensors (presumably because LiDAR isn’t yet cheap enough or durable enough to scale). So he has to argue that the problem is solvable with the vehicles he has already sold, which is why he tries to dismiss the value and relevance of LiDAR. But he isn’t arguing from first principles or from state-of-the-art detection results, which point to fusing the various modalities, including LiDAR. He’s arguing under the self-imposed constraint of deploying Level 4/5 autonomy on a prematurely scaled vehicle fleet. The cart was sold before the horse. (NRB)

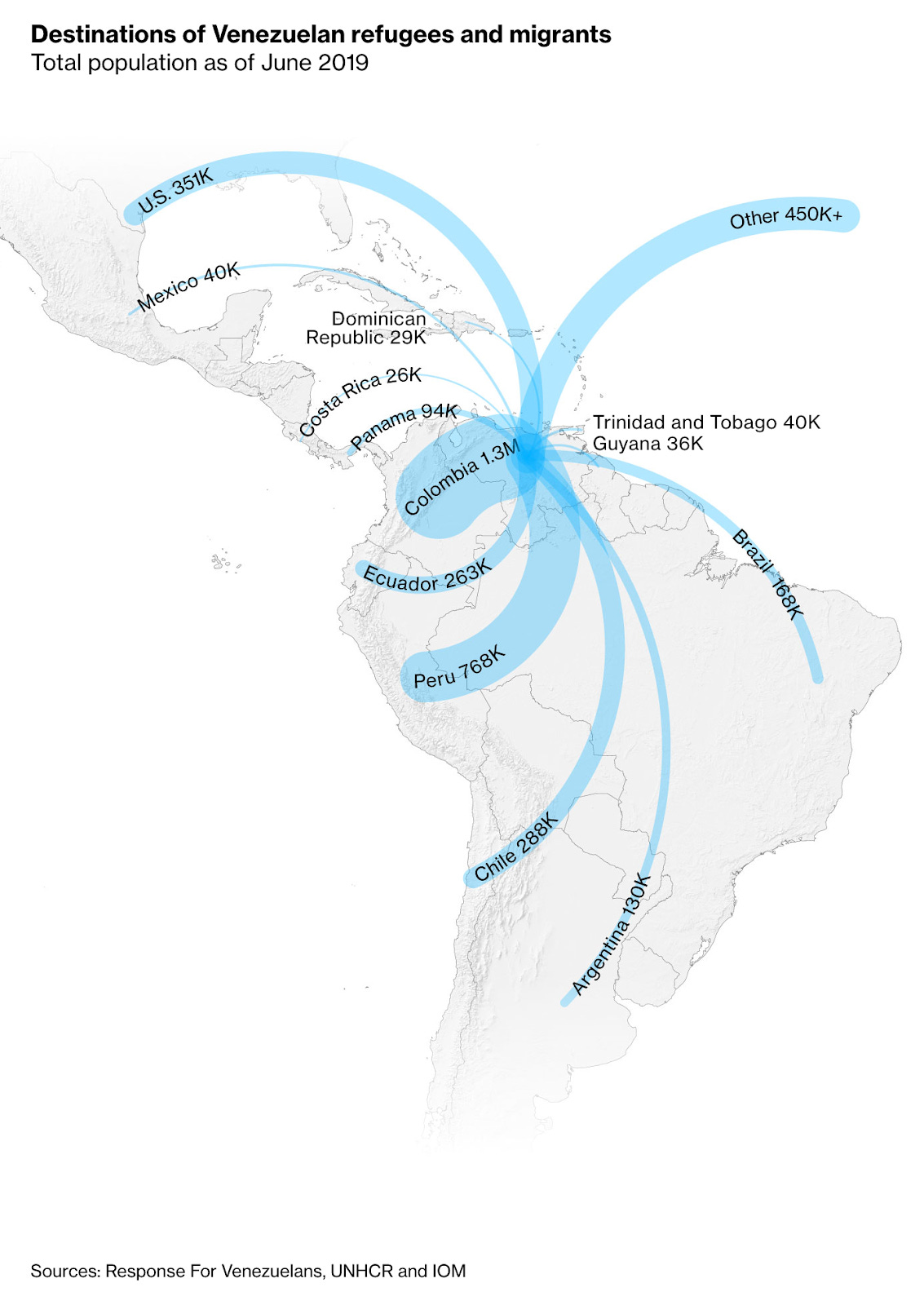

Map of the Day:

Via Bloomberg: “... the number of Venezuelans who have left the country hit 4 million. About 5,000 more are leaving the country each day, according to the UN High Commission for Refugees (UNHCR) and the International Organization for Migration. Most have ended up in neighboring Latin American countries, straining those countries’ infrastructure and job markets unprepared for a rapid population surge.” To put that number in context, the population of Venezuela is currently around 32 million. (NRB)

Quick Links:

Here’s some CJN clickbait if I ever saw it: FT on “Brain Machine Interfaces”. “But for now, consumers are taking advantage of a new wave of bluetooth headsets that let them probe the brain’s electrical circuitry at home, as they seek inner calm, improved athletic performance or just a good night’s sleep.” (Previously: CJN WITI editions on adaptogens and nootropics.) (NRB)

The airport is the new mall: “For the first time last year, Estée Lauder Co. generated more revenue at airports globally than at U.S. department stores, which for decades had been beauty companies’ biggest sales driver. Other luxury-goods companies, from spirits maker Bacardi Ltd. to Kering SA’s Gucci, are also expanding their presence at airport terminals.” (NRB)

Background on Polestar, the EV performance brand from Volvo. (CJN)

Thanks for reading,

Noah (NRB) & Colin (CJN)