Why is this interesting? - The Content Moderation Edition

On social media, content moderation, and the abuse problem

Noah here. There’s a thread in this conversation between Charlie Warzel and Sarah Jeong about YouTube’s policies that’s been nibbling at my brain for a while now. It’s about how YouTube writes rules of conduct as if they’re going to be enforced in black and white and then treats them more like standards or principles.

Why is this interesting?

I was thinking about this again after listening to a recent Reply All episode on the harassment of Carlos Maza, a gay YouTube video producer. After drawing the ire of conservative YouTuber Steven Crowder, Maza became the target of abuse. In response, he put together a video to display what he saw as clear evidence that the site’s rules around language and harassment had been broken. At first YouTube said Crowder hadn’t violated the site’s rules, then later backtracked and “demonetized” Crowder but allowed him to stay on the site (a fairly toothless punishment, as Maza points out). As Warzel explains, this actually only makes things worse:

The end result is that YouTube loses all trust, makes almost nobody happy and basically only empowers the worst actors by giving them more reason to have grievances. Even when YouTube does try to explain the logic to its rules, as it eventually did this week, it inadvertently lays out a map for how bad actors can sidestep takedowns, giving bad faith creators workarounds to harass or profit off bigotry. You know you’re in a real dark place when the good decisions YouTube makes are equally troubling.

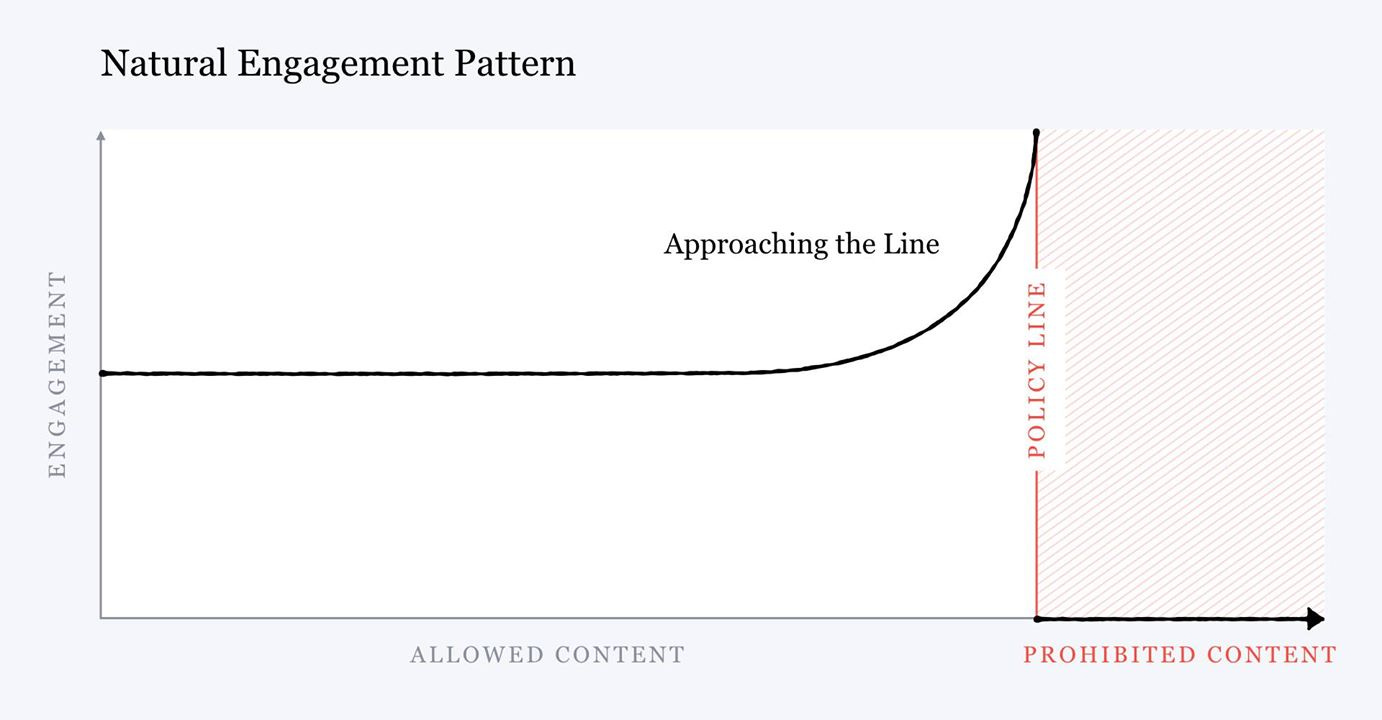

Mark Zuckerberg echoed this in a post he wrote last November about the content moderation problem. The closer someone gets to the policy line, he explained, the more extreme the content gets. Here’s how it was illustrated:

So what’s the answer? No one knows exactly. In the past this was partly dealt with through the governing of public airwaves (and the laws that could be imposed as part of that) and partly by the extreme cost of being a broadcaster (and the market forces that could be imposed as part of that). But this is a different kind of problem and, unfortunately, every time the platforms try to make it better they only seem to dig the hole deeper for themselves. Warzel points to a Tweet by friend of WITI Felix Salmon (expanded upon here) who suggests that the only possible path is to move away from rule-based regulation and focus on principles. That’s the point Jeong is making as well (while they’re using different legal language, I think they mean roughly the same thing).

You know who else seems to agree? Mark Zuckerberg. A November post he wrote kicked off research around building out an independent body to help moderate content on Facebook. They’re still working out the specifics, but it sounds like it’s heavily inspired by the way the legal system works in the United States. What’s more, he acknowledges that it will be a mix of rules, standards, and principles, applied by various systems in various ways (AI is particularly good at flagging rules violations, for instance). Here’s Zuckerberg from a conversation he did with legal scholar Jonathan Zittrain at the beginning of the year (I wrote about some of the ideas in WITI 6/6 - The Information Fiduciary Edition):

We're never going to be able to get out of the business of making frontline judgments. We'll have the AI systems flag content that they think is against policies or could be, and then we'll have people-- this set of 30 thousand people, which is growing-- that is trained to basically understand what the policies are. We have to make the frontline decisions, because a lot of this stuff needs to get handled in a timely way, and a more deliberative process that's thinking about the fairness and the policies overall should happen over a different timeframe than what is often relevant, which is the enforcement of the initial policy. But I do think overall for a lot of the biggest questions, I just want to build a more independent process.

Just last month Zuckerberg devoted an entire conversation to the oversight board with two more legal scholars. It was released in conjunction with Facebook publishing a 44-page report outlining what they learned researching the idea over the last six months. While on the one hand it’s easy to conclude that Facebook wants to create both its own currency and legal system, on the other it’s a laudable acceptance that algorithms aren’t going to get us out of this quagmire. (NRB)

Mix of the day:

This is a masterful demonstration of DJing, turntablism and inspiration from Jeff Mills. Even better to watch it on video to see the nuance behind the gestures and every practiced movement to create the live mix. (CJN)

Quick Links:

Was reminded recently of this excellent David Grann piece from 2003 on the tunnels that pipe water into New York City. (NRB)

Scientists figured out how to get Toxoplasma gondii (Toxo) to grow in mice. That’s a big deal because Toxo, the parasite that can make people crazy, reproduces in cats and has been hard to study as a result. The story also has this fun aside: “Science journalists are often mocked for describing preliminary discoveries in mice that may or may not translate to humans. There’s even a Twitter account—@justsaysinmice—that retweets overhyped news stories with ‘IN MICE’ appended overhead. It’s delightful, then, to write about a study in which doing something in mice is the entire point.” (NRB)

Archive.org keeps a collection of speed run videos (“trying to complete a videogame in the fastest time possible”). (NRB)

Thanks for reading,

Noah (NRB) & Colin (CJN)